What is the ‘Helicopter Racing League’?

Helicopter Racing League (HRL) is a fictitious sports league used as a case study for Google Cloud Platform (GCP). The league holds global and regional racing competitions throughout the year and streams its races live all over the world. In this case study, we will see how the league can use the managed artificial intelligence and machine learning services offered by GCP to increase global fan engagement.

Why is this case study important?

HRL is one of the four case studies included in the Google Professional Cloud Architect (PCA) certification examination. We can expect a maximum of 10 questions based on any two of the case studies listed in the examination guide.

For tips, resources, and more helpful information on the PCA exam, please read my blog – Six steps to Google Professional Cloud Architect Certification

How to approach the solution?

The solution approach follows a simple four-step method, described in detail in my previous blog.

- Identify GCP products or services based on business and technical requirements.

- Identify the knowledge gaps and do the relevant reading.

- Refer to industry best-practice guidelines.

- Draw the solution diagram and discuss it with colleagues for their feedback.

Company overview

Helicopter Racing League (HRL) is a global sports league for competitive helicopter racing. Each year HRL holds the world championship and several regional league competitions where teams compete to earn a spot in the world championship. HRL offers a paid service to stream the races all over the world with live telemetry and predictions throughout each race.

Solution concept

HRL wants to migrate their existing service to a new platform to expand their use of managed AI and ML services to facilitate race predictions. Additionally, as new fans engage with the sport, particularly in emerging regions, they want to move the serving of their content, both real-time and recorded, closer to their users.

Existing technical environment

HRL is a public cloud-first company; the core of their mission-critical applications runs on their current public cloud provider. Video recording and editing is performed at the race tracks, and the content is encoded and transcoded, where needed, in the cloud. Enterprise-grade connectivity and local compute is provided by truck-mounted mobile data centers. Their race prediction services are hosted exclusively on their existing public cloud provider. Their existing technical environment is as follows:

• Existing content is stored in an object storage service on their existing public cloud provider.

• Video encoding and transcoding is performed on VMs created for each job.

• Race predictions are performed using TensorFlow running on VMs in the current public cloud provider.

Business requirements

HRL’s owners want to expand their predictive capabilities and reduce latency for their viewers in emerging markets. Their requirements are:

- Support ability to expose the predictive models to partners.

- Increase predictive capabilities during and before races: Race results, Mechanical failures, Crowd sentiment

- Increase telemetry and create additional insights.

- Measure fan engagement with new predictions.

- Enhance global availability and quality of the broadcasts.

- Increase the number of concurrent viewers.

- Minimize operational complexity.

- Ensure compliance with regulations.

- Create a merchandising revenue stream.

Products/services Identified:

- Cloud Storage buckets for storing season-long race data. With an Object Lifecycle Management policy, Cloud Storage can move aging data to cheaper storage classes, which helps optimize long-term cost.

- Cloud Dataflow (Apache Beam pipelines) for processing the data before storing it in BigQuery for analytics.

- AI Platform (now Vertex AI) to deploy existing TensorFlow ML models and expose them to partners.

- Cloud Natural Language API for predictions based on crowd sentiment.

- Google Kubernetes Engine clusters in multiple regions for scalability and global presence in production environments.

- Cloud Pub/Sub to feed raw video files into the Transcoder API.

- Cloud Monitoring dashboards to reduce operational complexity and provide application insights.

- Online merchandise portal on GKE to generate merchandising revenue.

- GCP services such as Cloud IAM allow administrators to set up user authorization and access to Google Cloud resources.

- GCP products comply with the following regulations and standards applicable to the media business:

  - Motion Picture Association (MPA)

  - Independent Security Evaluators (ISE)

  - MTCS (Singapore) Tier 3

  - General Data Protection Regulation (GDPR)
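
The Object Lifecycle Management policy mentioned for Cloud Storage above could look roughly like the sketch below. It only builds the JSON configuration; the day thresholds (30 and 365) and the helper name `build_lifecycle_policy` are illustrative assumptions, not values taken from the case study.

```python
import json


def build_lifecycle_policy(nearline_after_days=30, coldline_after_days=365):
    """Build a Cloud Storage lifecycle configuration as a plain dict.

    The thresholds are assumptions for illustration; HRL would tune them
    to how often older race data is actually read.
    """
    if not 0 < nearline_after_days < coldline_after_days:
        raise ValueError("thresholds must be positive and increasing")
    return {
        "lifecycle": {
            "rule": [
                {
                    # Move objects out of STANDARD once they are old enough.
                    "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
                    "condition": {
                        "age": nearline_after_days,
                        "matchesStorageClass": ["STANDARD"],
                    },
                },
                {
                    # Move older NEARLINE objects to the cheaper COLDLINE class.
                    "action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
                    "condition": {
                        "age": coldline_after_days,
                        "matchesStorageClass": ["NEARLINE"],
                    },
                },
            ]
        }
    }


if __name__ == "__main__":
    print(json.dumps(build_lifecycle_policy(), indent=2))
```

The printed JSON can be saved to a file and applied to a bucket, for example through the lifecycle option of the `gcloud storage buckets update` command. No rule deletes data, which matches the requirement to keep all historical records.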

Technical requirements

- Maintain or increase prediction throughput and accuracy.

- Reduce viewer latency.

- Increase transcoding performance.

- Create real-time analytics of viewer consumption patterns and engagement.

- Create a data mart to enable processing of large volumes of race data.

Products/services Identified:

- Machine learning (TensorFlow) models are already used by HRL.

- Global Load Balancer along with Content Delivery Network (CDN) to provide content close to users for reduced latency.

- Transcoder API (pre-GA when this was written, now generally available) or App Engine (with an FFmpeg container for transcoding) for improved transcoding performance.

- BigQuery to store the large volume of race data; it serves as the data mart and provides scalable analytics.

Executive statement

Our CEO, S. Hawke, wants to bring high-adrenaline racing to fans all around the world. We listen to our fans, and they want enhanced video streams that include predictions of events within the race (e.g., overtaking). Our current platform allows us to predict race outcomes but lacks the facility to support real-time predictions during races and the capacity to process season-long results.

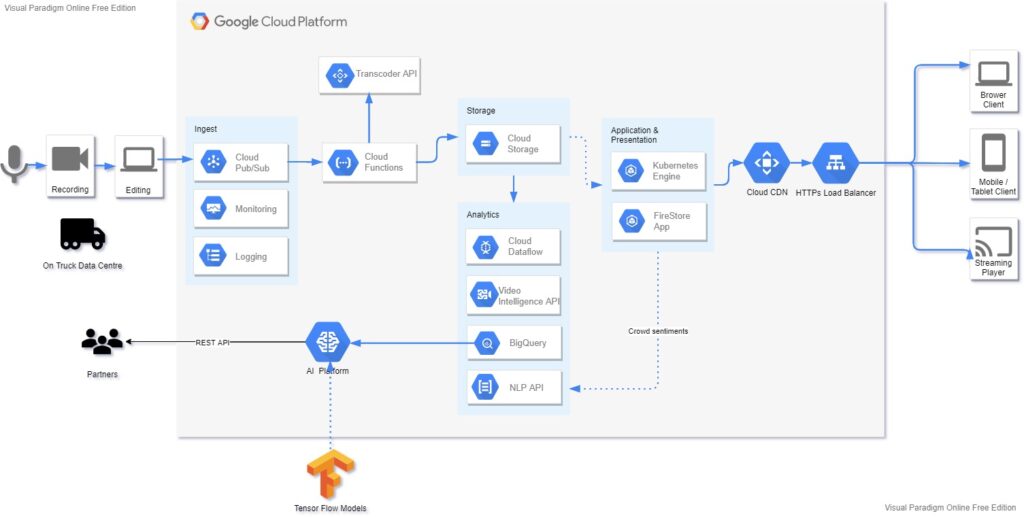

Proposed Solution Diagram

1. Process season-long results (Batch data processing)

Cloud Storage -> Cloud Dataflow -> BigQuery -> ML & AI

ML & AI options: BigQuery ML / AI Platform

Solution Description

To process HRL’s season-long data, the raw video files are streamed from the truck-mounted data centers via Cloud Pub/Sub in real time. Each message triggers a Cloud Function, which in turn invokes the Transcoder API. The Transcoder API converts the video files into different formats (resolutions, frame rates) suitable for different end-user devices. The processed videos are then stored in a Cloud Storage bucket for long-term storage.
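
As a rough illustration of the Pub/Sub-triggered step described above, the sketch below decodes a Pub/Sub-style event and plans one transcoding job per output rendition. It deliberately stops short of calling the Transcoder API. The message field `input_uri`, the rendition ladder, the bucket names, and the function names are all assumptions made for this example.

```python
import base64
import json

# Hypothetical rendition ladder: (label, height in pixels, frames per second).
RENDITIONS = [("sd", 480, 30), ("hd", 720, 30), ("full-hd", 1080, 60)]


def parse_pubsub_event(event):
    """Decode the base64-encoded 'data' field of a Pub/Sub event into a dict."""
    return json.loads(base64.b64decode(event["data"]).decode("utf-8"))


def plan_transcode_jobs(event, output_bucket):
    """Return one job description per rendition for the announced video.

    Assumes the message body is JSON with an 'input_uri' field that points
    at the raw video; a real function would submit these jobs for transcoding.
    """
    message = parse_pubsub_event(event)
    input_uri = message["input_uri"]
    # Use the file name without its extension as the output folder.
    stem = input_uri.rsplit("/", 1)[-1].rsplit(".", 1)[0]
    return [
        {
            "input_uri": input_uri,
            "output_uri": f"gs://{output_bucket}/{stem}/{label}/",
            "height": height,
            "fps": fps,
        }
        for label, height, fps in RENDITIONS
    ]


if __name__ == "__main__":
    payload = json.dumps({"input_uri": "gs://hrl-raw/race-01.mp4"}).encode("utf-8")
    event = {"data": base64.b64encode(payload).decode("ascii")}
    for job in plan_transcode_jobs(event, "hrl-processed"):
        print(job)
```

Keeping the planning logic separate from the API call makes it easy to test locally before wiring it to a real trigger.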

The presentation layer, i.e. the website or mobile app, fetches the video content from Cloud Storage and delivers it to end users. The Content Delivery Network (Cloud CDN) caches the content to reduce latency and serves it from the location closest to the user. HTTP(S) Load Balancing keeps the service highly available to users across the world.

For analytics and prediction capability, the videos are first fed into Cloud Dataflow pipelines for pre-processing. The pipeline also uses the Video Intelligence API to extract useful information from the videos, and the Natural Language API helps in analysing crowd sentiment based on user comments and similar text. All this analytical data is stored in BigQuery for generating insights. AI Platform (now Vertex AI) then delivers predictions using the TensorFlow ML models, based on the analytical data available in BigQuery.
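
To make the crowd-sentiment step more concrete, here is a minimal sketch of how per-comment sentiment scores might be rolled up into one row per race before loading into BigQuery. It assumes each comment has already been scored in the range -1.0 to 1.0, which is the range the Natural Language API uses for sentiment scores. The row layout, the 0.25 threshold, and the function names are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean


def label_sentiment(score, threshold=0.25):
    """Map a score in [-1.0, 1.0] to a coarse label (threshold is assumed)."""
    if score >= threshold:
        return "positive"
    if score <= -threshold:
        return "negative"
    return "neutral"


def summarize_by_race(scored_comments):
    """Aggregate (race_id, score) pairs into one summary row per race."""
    scores = defaultdict(list)
    for race_id, score in scored_comments:
        scores[race_id].append(score)
    rows = []
    for race_id in sorted(scores):
        avg = mean(scores[race_id])
        rows.append(
            {
                "race_id": race_id,
                "comment_count": len(scores[race_id]),
                "avg_score": round(avg, 3),
                "label": label_sentiment(avg),
            }
        )
    return rows


if __name__ == "__main__":
    sample = [("race-01", 0.5), ("race-01", 1.0), ("race-02", -0.5)]
    for row in summarize_by_race(sample):
        print(row)
```

Rows of this shape could then be joined with race telemetry in BigQuery to measure fan engagement alongside the predictions.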

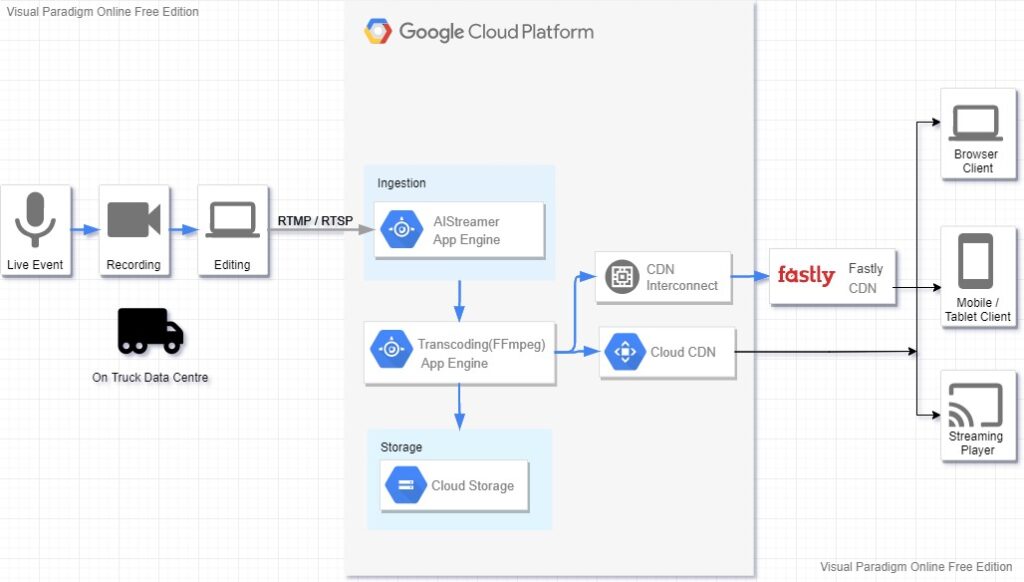

2. Real-time video streaming

Truck-mounted data center -> AIStreamer Ingestion Library -> Transcoding -> Cloud CDN -> Users

Solution Description

For real-time video streaming, the AIStreamer ingestion pipeline receives the video streams from the truck-mounted data center and feeds them directly to a transcoding function running on App Engine for video format conversion. Cloud CDN then delivers the video content to end-user devices.

Beta Feature:

The Video Intelligence Streaming API supports standard live streaming protocols like RTSP, RTMP, and HLS. The AIStreamer ingestion pipeline behaves as a streaming proxy. To support live streaming protocols, the Video Intelligence API uses the GStreamer open media framework.

Further Reading – TerramEarth case study solution.

Sample Case Questions

- HRL is looking for a cost-effective approach for storing their race data such as telemetry. They want to keep all historical records, train models using only the previous season’s data, and plan for data growth in terms of volume and information collected. You need to propose a data solution. Considering HRL business requirements and the goals expressed by CEO S. Hawke, what should you do?

- HRL wants to expand its use of managed AI and ML services to facilitate race predictions. Currently, race predictions are performed using TensorFlow running on VMs in the current public cloud provider. Which GCP services could host HRL’s TensorFlow models in a fully managed way?

- HRL wants to migrate their existing cloud service to the GCP platform with solutions that allow them to use and analyze video of the races both in real-time and recorded for broadcasting, on-demand archive, forecasts, and deeper insights. During a race filming, how can you manage both live playbacks of the video and live annotations so that they are immediately accessible to users without coding?

Check out 200+ practice exam questions here – Google Professional Cloud Architect Certification – Practice Exam

I hope you find this case study solution useful for your PCA certification exam preparation.

Written exclusively for ReviewNPrep.com – By Manoj P. (Connect with me on LinkedIn).